Binqbu2002:Fault-tolerant1

Contents

- 1 Design Requirement

- 2 Basic Idea

- 3 Next Week's Plan

Design Requirement

D5.1 Requirement

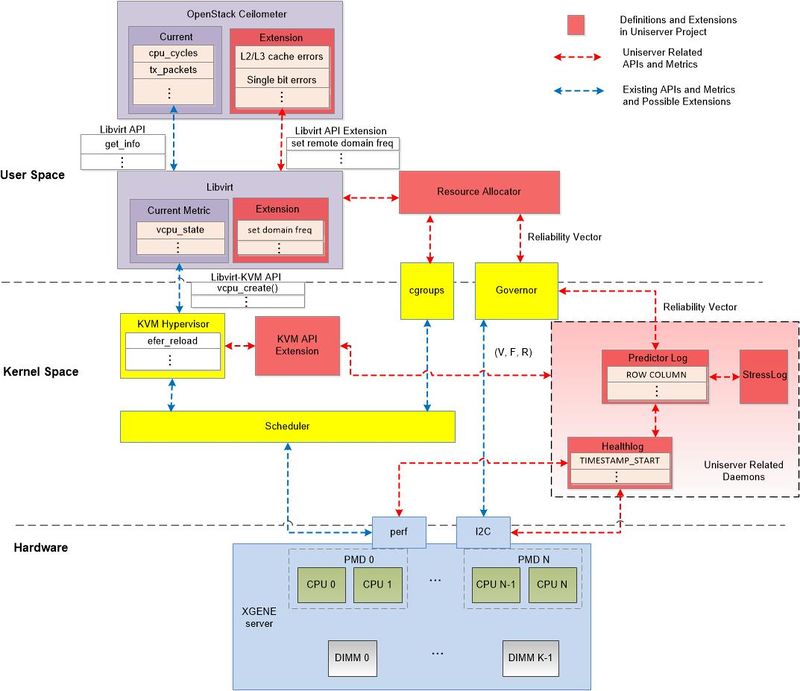

- Figure 1: Potential exchange of information across system layers

Figure 1 depicts the different layers of the Uniserver ecosystem and visualizes the flow of information between them through the essential components. One of the most fundamental assumptions in the system architecture of the Uniserver platform is that each CPU and DRAM DIMM may have intrinsically different capabilities. As explained in deliverables D3.1 and D4.1, in the X-Gene2, the chassis of the Uniserver, the CPU voltage/frequency can be set separately on each PMD (consisting of 2 CPUs and 2 levels of cache, L1/L2), and the DRAM refresh rate/voltage/frequency can be set per channel or per DIMM. As explained in deliverable D4.1, configuration values such as the voltage, frequency and refresh rate of the system and its subcomponents can be controlled by accessing the appropriate hardware sensor registers and ACPI state registers through the hardware registers and software (e.g. i2c for Linux) available on the X-Gene microserver. The power and temperature of different hardware components such as DRAM, DIMMs, SoC and PMD can be recorded in the X-Gene microserver through the available thermal and power sensors. At the hypervisor layer, HW metrics related mainly to power and performance, such as CPU and memory utilization, cache misses etc., are already monitored through an existing API. In Uniserver we plan to enhance this API so that it can collect and monitor reliability-related information by interacting with the introduced daemons. For example, CPU errors could be passed from Healthlog to the Monitor CPU module through new functions that are explained in Section 3 (e.g. get_healthlog).

We would like to note here that the hypervisor (i.e. KVM) is a module within the kernel space, along with other modules of the operating system such as the scheduler, governors, cgroups etc., which are used to manage and direct the overall system operation. The hypervisor is responsible for creating and running one or more virtual machines (VMs), the so-called guest machines (i.e. in user space). In Linux, the operating system (OS) used in Uniserver, memory is divided into two distinct regions: user space and kernel space. User space is the set of locations where normal user processes run (i.e. everything other than the kernel). The role of the OS kernel is to keep applications running in this space from interfering with each other and with the machine. Kernel space is the location where the kernel code is stored and executes. Processes running in user space have access only to a limited part of memory, whereas the kernel has access to all of memory. Processes running in user space also have no direct access to kernel space; they can only reach a small part of the kernel via an interface exposed by the kernel, the system calls.

- For more details, please see the deliverable: 1st Report on Hypervisor / System / Software Interface, deliverable 2016.11

Previous Discussion

Redirect the Hypervisor to Reliable Domain in Memory

Have a reliable hardware domain (memory and cores operate in nominal conditions) and map data and any hypervisor daemon threads on that domain

- This means no memory or CPU errors are possible for these data and threads.

- Motivated by the fact that typically hypervisor data size is minimal compared to VM/application data.

- Note: errors are still possible by relaxed CPU cores executing in hypervisor mode to serve VMs.

Identify data structures with a liveness time less than the retention or refresh time of DRAM

- This relaxes the requirement to have all hypervisor data on reliable memory by having liveness-, retention- and refresh-aware allocation.

- Is it worth it? Are there some sizeable data structures to justify this approach, for example, large buffer caches for (file) I/O?

- Dynamically control the reliability of CPU cores depending on execution mode.

- Attempt to avoid CPU errors in hypervisor execution by reverting to nominal margins for relaxed cores.

- Is this viable? Especially challenging is to verify that a core has been set to reliable from relaxed execution.

- What is the latency of this approach on the target platform?

- Split hypervisor services into a reliable server and relaxed clients issuing service calls.

- Similar to the FTXen approach for hypercall proxy and agents. How to apply this on KVM?

- Particularly challenging, given that KVM has an inherently symmetric architecture (with the exception of the IOthread).

- Replication and forwarding of VM state-changing operations to the replica (the approach followed by VMware).

- Can this approach ever be energy efficient?

- However, what if this approach is followed ANYWAY in the datacenter (to maintain a hot standby VM)? Is this often the case? I recall a talk by Valentina Salapura claiming so.

Backup and Recovery using Checkpoint

Checkpoint and restart at the hypervisor level

- Assumes the checkpoint and restart services of the hypervisor are reliable (use techniques from the previous section).

- Take regular (how often?) checkpoints of hypervisor state.

- In case of an unrecoverable error, restart the hypervisor (and failed VMs?).

Profiling the memory structures by lifetime, sensitivity to errors and call site (and thus type of object) can help distinguish between data that should be checkpointed and data that may not need to be.

- Just restart the system if applications are stateless. (Could the filesystem be a problem, even in this case?)

- For both cases described above, what is the cost of restarting? A full system reboot?

- Does the platform support anything less intrusive? Can individual cores be reset?

- If it does, we should consider whether the risk of latent fault effects is worth it.

- If it does not, is it possible, at least temporarily, to continue execution with fewer cores (without the ones that suffered the fault)?

For more information, please see T5.2: Design and implementation of an efficient, fault-tolerant hypervisor

Basic Idea

In this section, I propose a full protection solution for the hypervisor. To back up the hypervisor and recover it, our snapshot should record the hypervisor state and the VM state with the lowest possible recording overhead. In detail, hypervisor corruption includes the following cases: memory errors (single/double bit flips) and CPU errors (CPU voltage/frequency/cache interrupt). In my previous work (2016.10-2017.2), I simulated error injection into the QEMU hypervisor and traced the impact. To back up hypervisor data, we should first become familiar with which kinds of data in QEMU need to be backed up. In this wiki, my approach does not target one specific data structure or state; instead, the goal is to achieve selective backup and recovery using snapshots (checkpoints). I classify the data of a running hypervisor as follows and propose a different data protection solution for each class:

Overview

- Static data structure: This kind of data includes most of the useful files (.c and .o files) in the QEMU source tree, for example cpus.c, cpus.o and target-i386/cpu.h. Protecting such data is quite simple: we set checkpoints that check the state of a normal file system (treating the QEMU source tree as a file system) and recover from corruption using a history record (rolling back to a historical state or to the initial state).

- QEMU dynamic data: Dynamic data covers many things. One example is the in-memory data structures that QEMU loads at a snapshot state. Another is the instruction/syscall/hypercall state. These data vary over time. Finally, the process and pthread state of the different VMs can also be regarded as dynamic data. To back up such data, we should consider a coarse approach: back up the QEMU-relevant memory as mirror memory. In Babis's work, we have already built a reliable domain by changing the memory layout of Linux. Thus, the most direct approach is to place the mirror memory in the reliable zone of the memory space and achieve instruction-level copy-on-write with snapshots. My Ph.D. work focused on mirror memory and recovery; at that time, I proposed modifying the memory layout for mirror memory.

- VM image and running state: The VM image and running state are commonly handled in hypervisor HA solutions. For the Uniserver project, we should guarantee that each VM's running state is not affected by errors, without any VM-level restart. For this case, I hope to achieve COLO-based snapshots and live migration. COLO comes from my Ph.D. project. It achieves lower migration overhead than previous approaches.

- QEMU working state: The QEMU working state can be regarded as a special kind of dynamic data in QEMU, so mirror memory can also be used to back it up and recover it. However, the working state is usually the first data lost when errors occur. It includes the TB information in the code cache, where each TB has its own TB chain. Our snapshot solution aims to back up the TB state and recover it.

Record and Recover Static Data Structure from QEMU

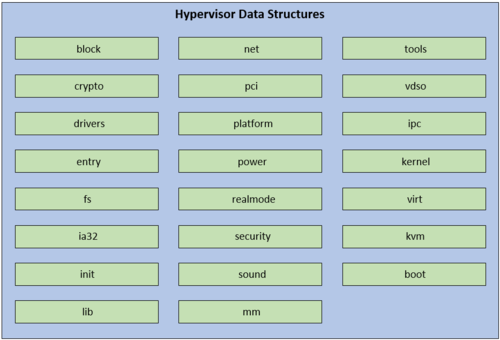

- Figure 2: Recover hypervisor data structure

Figure 2 lists the useful QEMU data structures. Accordingly, we focus on providing snapshot and rollback functions for the QEMU file system, driven by correctable and uncorrectable errors (UTH) that trigger an interrupt or exception in QEMU. In the optimistic case, our fault-tolerant module reverts QEMU to the last good configuration after an error. However, if no good configuration is found, the fault-tolerant module reverts QEMU to the default safe configuration.
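The rollback policy described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `ConfigHistory`, `Snapshot`, `recover` and `DEFAULT_SAFE_CONFIG` are hypothetical names, and the configuration is modelled as a plain dictionary rather than real QEMU files.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Hypothetical default; the wiki does not specify what the safe configuration contains.
DEFAULT_SAFE_CONFIG: Dict[str, str] = {"machine": "default", "smp": "1"}


@dataclass
class Snapshot:
    config: Dict[str, str]
    good: bool  # True if the snapshot passed an integrity check when it was taken


@dataclass
class ConfigHistory:
    snapshots: List[Snapshot] = field(default_factory=list)

    def record(self, config: Dict[str, str], good: bool) -> None:
        # Store a copy so later changes to `config` do not alter the history.
        self.snapshots.append(Snapshot(dict(config), good))

    def last_good(self) -> Optional[Snapshot]:
        # Walk the history from newest to oldest.
        for snap in reversed(self.snapshots):
            if snap.good:
                return snap
        return None


def recover(history: ConfigHistory) -> Dict[str, str]:
    """Return the last good configuration, or the default safe one if none exists."""
    snap = history.last_good()
    if snap is not None:
        return dict(snap.config)
    return dict(DEFAULT_SAFE_CONFIG)


history = ConfigHistory()
history.record({"machine": "virt", "smp": "4"}, good=True)
history.record({"machine": "virt", "smp": "8"}, good=False)  # corrupted snapshot
restored = recover(history)  # falls back to the smp=4 snapshot
```

How a snapshot is judged "good" (for example a checksum over the protected files) is left open here, as it is in the text.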

To back up static data structures, we need to address two challenges:

- Backing up the whole QEMU system is too costly because it is too large. Could we choose only the most sensitive data to protect? For example, the data structures most closely tied to running VMs (vl.o, cpus.o, target-XXX/excp-helper.o ...).

- Do we need to protect static data structures at all? Because the data and its configuration are static, if corruption happens we could simply install a fresh copy and recover the configuration. Thus, we should estimate the overhead of the two options (recovering the sensitive data structures vs. reinstalling QEMU and recovering the configuration).

Using Mirror Memory to Replicate QEMU Dynamic Data

Basic mechanism

In our previous work, we successfully partitioned the memory layout into a reliable zone, a normal zone and a DMA zone. Based on this work, we can place the original memory in the normal zone and the mirror memory in the reliable zone. The key idea is that when the memory management module (MMU) allocates memory space for a process, it also creates the mirror space for that process. We hope to guarantee write synchronization using a binary translation technique.

The mirror space is created as follows:

- When the OS is initialized, a block of physical memory is reserved as a mirror area in the reliable zone. The size of this area is the same as the size of the original memory (this can be implemented with malloc(), kmalloc(), etc.).

- We modify the MMU to intercept the page-table-related operations of a process. When a native page table is created, the related mirror page table is also created.

- When a process writes to the native space, the write instruction is replicated for the mirror space by binary translation, and the redundant data is written by the mirror instruction.

When a memory failure occurs, the error detection mechanism (e.g., ECC, healthlog and stresslog) notifies the host by raising a machine check exception (MCE). We can modify the MCE mechanism so that it does not restart the whole system; instead, the system quickly and effectively retrieves the corrupted data using the following steps:

- A new page is allocated to recover the data in the normal zone.

- Remap the new page: Corrupted PTE is rewritten and mapped to the new page that was just allocated.

- Data is copied from mva (mirror virtual address) to nva (native virtual address). After error recovery, the program continues to execute.
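The creation and recovery steps above can be illustrated with a small user-level simulation. It is a sketch only, not the kernel implementation: the class `MirroredMemory`, its methods and the tiny page size are hypothetical, page tables are modelled as dictionaries, and the "mirror instruction" is just a second store in `write()`.

```python
PAGE_SIZE = 16  # deliberately tiny so the example stays readable


class MirroredMemory:
    """Toy model: native frames live in the normal zone, mirror frames in the reliable zone."""

    def __init__(self, num_pages: int) -> None:
        self.native = [bytearray(PAGE_SIZE) for _ in range(num_pages)]
        self.mirror = [bytearray(PAGE_SIZE) for _ in range(num_pages)]  # same size as native
        self.free_native = list(range(num_pages))
        self.page_table = {}         # virtual page number -> native frame
        self.mirror_page_table = {}  # virtual page number -> mirror frame
        self.next_mirror = 0

    def map_page(self, vpn: int) -> None:
        # Creating a native mapping also creates the mirror mapping.
        self.page_table[vpn] = self.free_native.pop(0)
        self.mirror_page_table[vpn] = self.next_mirror
        self.next_mirror += 1

    def write(self, vaddr: int, value: int) -> None:
        vpn, off = divmod(vaddr, PAGE_SIZE)
        self.native[self.page_table[vpn]][off] = value         # native write
        self.mirror[self.mirror_page_table[vpn]][off] = value  # replicated mirror write

    def read(self, vaddr: int) -> int:
        vpn, off = divmod(vaddr, PAGE_SIZE)
        return self.native[self.page_table[vpn]][off]

    def recover(self, vpn: int) -> None:
        new_frame = self.free_native.pop(0)   # 1. allocate a new page in the normal zone
        self.page_table[vpn] = new_frame      # 2. rewrite the corrupted PTE
        src = self.mirror[self.mirror_page_table[vpn]]
        self.native[new_frame][:] = src       # 3. copy the data from mva to nva


mem = MirroredMemory(num_pages=4)
mem.map_page(0)
mem.write(3, 0xAB)
mem.native[mem.page_table[0]][3] ^= 0x01  # simulate a single bit flip in native memory
mem.recover(0)                            # what the modified MCE handler would trigger
```

After `recover(0)`, reading address 3 returns the original value again, and the virtual page points to a different native frame; the faulty frame is simply never reused in this model.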

Implementing mirror memory in XV6

Currently, I have implemented mirror memory in XV6 by modifying the malloc() function to reserve the mirror area.

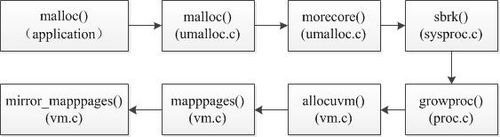

- Figure 3: Implement mirror memory in XV6

In Figure 3, we first revise the memory layout of the XV6 system and create a new API function, mirror_mappages() (derived from the original mappages() function). In the last step, mirror_mappages() is called to create the mirror page table. This completes the creation of the mirror PTEs.

However, not all PTEs in XV6 can be created through that function. For example, during initialization the PTEs are not created by calling mmap(); instead, a page table is placed at a fixed physical address, and XV6 then forces cr3 to point to that address. Therefore, we modify the main.c file, where the descriptor "entrypgdir" can be extended with mirror parameters. After these modifications, we can successfully create full mirror pages for the XV6 system.

Source code of mirror memory in XV6

To view the source code, please see: mirror memory in XV6

Write synchronization

Mirror instructions are added at compile time with static binary translation, which reduces the performance overhead at run time. The mirrored instructions are inserted when the OS loads the program from disk. However, interrupts may cause side effects once mirrored instructions are inserted. First, if an interrupt happens between a native instruction and the following mirror instruction, the data will not be replicated for a relatively long time. Second, in a multi-core environment, if two cores write to the same address through atomic instructions (the lock-prefixed instructions) instead of using other forms of locking, a data race occurs in which the mirror instructions destroy the atomicity. We hope to change the compilation stage so that it inserts the mirror instructions.

Using Code Cache to Recover Work State

Code Cache Workflow

The translation block (TB) is the basic unit on which QEMU performs translation (covering both guest and host code). Originally, TBs were converted to C code by DynGen, and GCC (the GNU C compiler) converted the C code into host-specific code. The issue with this procedure was that DynGen was tightly tied to GCC, which created problems as GCC evolved. To remove the tight coupling of the translator to GCC, a new procedure was put in place: TCG.

Dynamic translation converts code as and when needed. The idea is to spend most of the time executing the generated code rather than generating it. Every time code is generated from a TB, it is stored in the code cache before being executed. Most of the time the same TBs are required again and again, owing to locality of reference, so instead of regenerating the same code it is best to save it. Once the code cache is full, to keep things simple, the entire code cache is flushed instead of using an LRU algorithm.

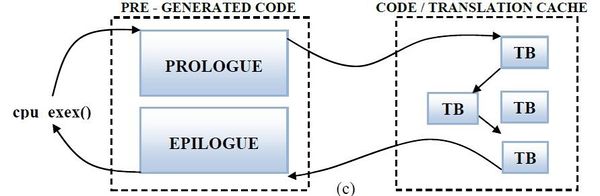

- Figure 4: Code Cache Workflow

Figure 4 shows the workflow of the code cache. With chaining, after the execution of one TB, execution jumps directly to the next TB without returning to the static code. The chaining of blocks happens when a TB returns to the static code. Thus, when TB1 returns to the static code (as there was no chaining yet), the next TB, TB2, is found, generated and executed. When TB2 returns, it is immediately chained to TB1. This ensures that the next time TB1 is executed, TB2 follows it without returning to the static code.
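To make the flush-when-full policy and the effect of chaining concrete, here is a toy Python model. It is not QEMU code: `TranslationBlock`, `CodeCache` and `run` are hypothetical names, a TB is identified only by its guest PC, and each TB has a single successor slot, which is a simplification made for this sketch.

```python
class TranslationBlock:
    def __init__(self, pc: int) -> None:
        self.pc = pc
        self.next = None  # chained successor TB, if any


class CodeCache:
    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.blocks = {}       # guest pc -> TranslationBlock
        self.translations = 0
        self.flushes = 0

    def lookup_or_translate(self, pc: int) -> TranslationBlock:
        tb = self.blocks.get(pc)
        if tb is None:
            if len(self.blocks) >= self.capacity:
                self.blocks.clear()  # flush the whole cache instead of evicting by LRU
                self.flushes += 1
            tb = TranslationBlock(pc)  # stands in for code generation
            self.blocks[pc] = tb
            self.translations += 1
        return tb


def run(cache: CodeCache, trace) -> int:
    """Execute the guest PCs in `trace`; return how often control went back to the static code."""
    returns_to_static = 0
    prev = None
    for pc in trace:
        nxt = prev.next if prev is not None else None
        if nxt is not None and nxt.pc == pc and cache.blocks.get(pc) is nxt:
            tb = nxt  # chained: jump directly from the previous TB
        else:
            returns_to_static += 1
            tb = cache.lookup_or_translate(pc)
            # Chain the previous TB to this one, unless a flush just discarded it.
            if prev is not None and cache.blocks.get(prev.pc) is prev:
                prev.next = tb
        prev = tb
    return returns_to_static


cache = CodeCache(capacity=8)
loop_returns = run(cache, [1, 2, 1, 2, 1, 2])
```

For the alternating trace above, only the first three steps go through the static code; once TB1 and TB2 are chained to each other, the remaining steps follow the chain, and only two translations are performed in total.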

Record Code Cache State

From the above, we can infer that the QEMU code cache stores the working state of all QEMU translation modules. The translation module is the most important component if we hope to recover the QEMU dynamic state. Thus, after recovering the QEMU static state and dynamic memory, we also need to recover the working state. To this end, recovering the code cache state is the most direct way.

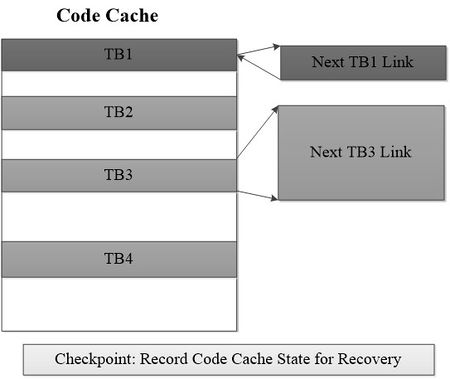

- Figure 5: Checkpoint to record code cache state

In Figure 5, at each checkpoint we record the information of all TBs in the code cache. This information includes the details of each TB and the next TB of the current TB chain in the code cache. After recovering the static and dynamic data in QEMU, we can reload all TBs from the TB chain according to the snapshot information. The advantage of recording code cache information this way is that a checkpoint stays small: it records only which TBs are in the code cache at a given timestamp, rather than the TBs themselves. These TBs can then be reloaded from the mirror memory according to the recorded TB information.
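A minimal sketch of such a metadata-only checkpoint is shown below. The function names `take_checkpoint` and `restore`, the JSON layout, and the `mirror_store` dictionary (standing in for translated code kept in mirror memory) are all assumptions made for illustration; none of them is an existing QEMU interface.

```python
import json


def take_checkpoint(tb_chain: dict, timestamp: float) -> str:
    """Record only which TBs are cached and how they are chained.

    tb_chain maps a TB's guest pc to the pc of its chained successor (or None).
    The translated code itself is NOT stored in the checkpoint.
    """
    entries = [[pc, nxt] for pc, nxt in sorted(tb_chain.items())]
    return json.dumps({"timestamp": timestamp, "tbs": entries})


def restore(checkpoint: str, mirror_store: dict):
    """Rebuild the code cache and TB chain from a checkpoint plus mirror memory."""
    data = json.loads(checkpoint)
    code_cache = {}
    chain = {}
    for pc, nxt in data["tbs"]:
        code_cache[pc] = mirror_store[pc]  # reload the translated code from the mirror
        chain[pc] = nxt
    return code_cache, chain


# Hypothetical mirror contents: guest pc -> translated host code bytes.
mirror_store = {0x1000: b"\x90\x90", 0x2000: b"\xc3", 0x3000: b"\xcc"}
cp = take_checkpoint({0x1000: 0x2000, 0x2000: 0x1000}, timestamp=10.0)
code_cache, chain = restore(cp, mirror_store)
```

The checkpoint contains only PCs and chain links, so its size grows with the number of cached TBs and not with the amount of generated code. TBs that exist in the mirror but were not cached at checkpoint time (0x3000 here) are not reloaded.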

COLO-based VM CheckPoint and Recovery

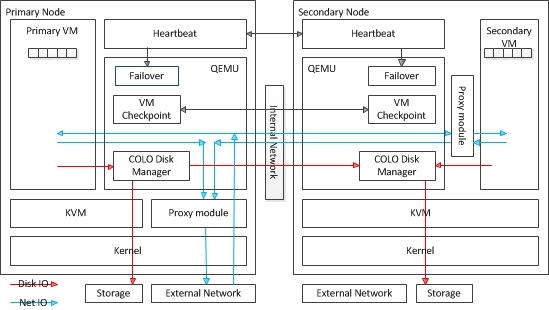

- Figure 6: Using the COLO architecture to recover a VM and its state

As shown in Figure 6, COLO consists of a pair of networked physical nodes: the primary node running the PVM, and the secondary node running the SVM, which maintains a valid replica of the PVM. The PVM and SVM execute in parallel and generate response packets for client requests according to the application semantics. Incoming packets from the client or external network are received by the primary node and then forwarded to the secondary node, so that both the PVM and the SVM are stimulated with the same requests.

COLO receives the outbound packets from both the PVM and the SVM and compares them before allowing the output to be sent to clients. The SVM qualifies as a valid replica of the PVM as long as it generates identical responses to all client requests. Once differences between the outputs of the PVM and the SVM are detected, COLO withholds transmission of the outbound packets until it has successfully synchronized the PVM state to the SVM.
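The compare-then-release behaviour can be sketched as follows. This is a toy model under stated assumptions, not COLO's implementation: `VM` and `ColoProxy` are hypothetical classes, the VM state is a single integer, and "synchronizing" means copying that integer from the PVM to the SVM.

```python
class VM:
    def __init__(self, name: str) -> None:
        self.name = name
        self.state = 0

    def handle(self, request: int) -> str:
        # A deterministic toy service: the response depends only on accumulated state.
        self.state += request
        return f"resp:{self.state}"


class ColoProxy:
    """Feeds both VMs the same request and releases output only when they agree."""

    def __init__(self, pvm: VM, svm: VM) -> None:
        self.pvm = pvm
        self.svm = svm
        self.checkpoints = 0

    def on_request(self, request: int) -> str:
        p_out = self.pvm.handle(request)  # primary processes the request
        s_out = self.svm.handle(request)  # the same request is forwarded to the secondary
        if p_out != s_out:
            # Outputs diverged: hold the packet until the SVM matches the PVM again.
            self.svm.state = self.pvm.state
            self.checkpoints += 1
        return p_out  # released to the client


proxy = ColoProxy(VM("pvm"), VM("svm"))
first = proxy.on_request(5)  # outputs match, no synchronization needed
proxy.svm.state += 1         # simulate the replica drifting away
second = proxy.on_request(2) # mismatch detected, SVM is resynchronized
```

As long as the outputs agree, no state transfer happens at all, which is the source of the low overhead; the state copy is paid only when a difference is actually observed.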

COLO comes from my colleagues' work during my time as a Ph.D. student. We can adapt their architecture for Uniserver's purposes. For more information on COLO, please see the COLO research wiki.

Another Mirror Memory Idea

We can back up the mirror memory from the host to a guest OS that uses the reliable domain. We can then use a COLO-based approach to recover host memory through a socket/network connection (this needs further discussion).

Checkpoint

We hope to set up an exception-driven backup. Assume that Healthlog or the MCE log reports errors to the Linux kernel but the report does not trigger an interrupt; in that case, a handler invokes the backup functions to start backing up memory data to the mirror memory. For the code cache, we take a checkpoint every 10 seconds (this interval could be estimated).

After QEMU hits an exception and exits because of the errors, we can use a checkpoint to recover it.
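The two triggers described here, error reports that arrive without an interrupt and a fixed code cache interval, could be combined roughly as below. `CheckpointScheduler` and its method names are hypothetical, time is passed in explicitly instead of being read from a clock, and the actions are only logged rather than performed.

```python
class CheckpointScheduler:
    def __init__(self, interval_s: float = 10.0) -> None:
        self.interval_s = interval_s      # code cache checkpoint period (10 s in the text)
        self.last_code_cache_cp = 0.0
        self.actions = []                 # log of (action, detail) tuples

    def on_error_report(self, source: str, raised_interrupt: bool) -> None:
        # Only reports that did NOT raise an interrupt start a memory backup here;
        # interrupt-raising errors are assumed to be handled by the exception path.
        if not raised_interrupt:
            self.actions.append(("backup_memory", source))

    def tick(self, now: float) -> None:
        # Periodic code cache checkpoint.
        if now - self.last_code_cache_cp >= self.interval_s:
            self.actions.append(("checkpoint_code_cache", now))
            self.last_code_cache_cp = now


sched = CheckpointScheduler(interval_s=10.0)
sched.on_error_report("healthlog", raised_interrupt=False)
sched.on_error_report("mce", raised_interrupt=True)
for t in (5.0, 10.0, 12.0, 20.0):
    sched.tick(t)
```

With these inputs the log contains one memory backup (for the Healthlog report) and two code cache checkpoints (at 10 s and 20 s).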

Next Week's Plan

- Talk with our team about the Uniserver wiki T5.1: Definition of hypervisor / OpenStack / OS driver interface, because I am confused about the basic requirements for some metrics: hypervisor without margins and with margins. I hope to get more details about that.

- Continue to study the core mechanism of QEMU code management.

- Study the COLO-based backup for host memory and try to create the process-based mirror.

- Read papers about fault-tolerant hypervisors from recent conferences (FAST, HPCA, VEE).